Week 2 Update

- Bradley Schulz

- Jan 23, 2023


Classifying shapes!

Goals from This Past Week

Team goals: Run a first iteration of our respective code assignments.

Personal goals: Have a CNN on Google Colab that can successfully classify the shape present on a given card. A stretch goal is to also identify the number of shapes on that card.

Accomplishments

I realized that a lot of preprocessing needs to happen before the shape can be classified, and that it overlaps heavily with the preprocessing my teammate Tyler is doing. So, we decided to each work on preprocessing on our own and then combine our best results.

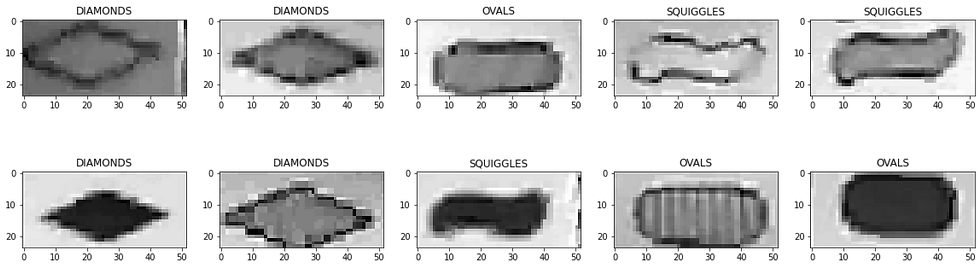

To minimize redundant work, I decided to take a break from preprocessing and focus on shape classification given a filled-in shape. I am assuming that prior code (from Tyler) has already isolated each card, meaning my code receives a 60x90-pixel image corresponding to one card.

I also realized that a CNN may not be the best approach, since I can classify each card using classical computer vision techniques alone. Applying only the algorithms I need keeps the system simpler and avoids the large number of computations a CNN requires.

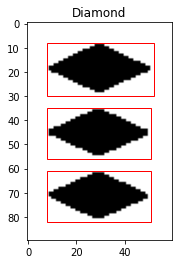

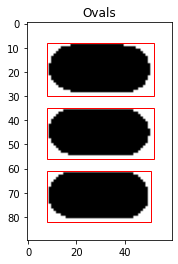

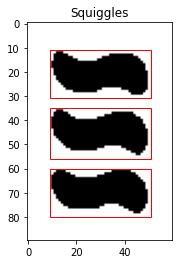

I wrote code to identify the individual shapes in each image and draw a bounding box around each one. To make sure the shapes are found correctly, I only keep shapes with an area between 200 and 1000 pixels in the original image (out of 60 x 90 = 5400 pixels total). Because the size of each input card image is standardized, hard-coding these values is acceptable.
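As a rough illustration of the step above, here is a minimal, dependency-free sketch of finding blobs and filtering them by area. It is not the code used in the project: the function name `find_shapes`, the 0/1 list-of-rows mask format, and the choice of 4-connected flood fill are all assumptions made for this example. Only the 200 and 1000 pixel bounds come from the post.

```python
from collections import deque

MIN_AREA = 200   # lower area bound from the post, in pixels
MAX_AREA = 1000  # upper area bound from the post, in pixels


def find_shapes(mask, min_area=MIN_AREA, max_area=MAX_AREA):
    """Return (x, y, width, height, area) for each blob whose area is in range.

    `mask` is a list of rows of 0/1 values (1 = shape pixel). It is assumed
    to be an already thresholded image of a single card (hypothetical format).
    """
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for y0 in range(height):
        for x0 in range(width):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            # Breadth-first flood fill over 4-connected neighbours.
            queue = deque([(x0, y0)])
            seen[y0][x0] = True
            area = 0
            min_x = max_x = x0
            min_y = max_y = y0
            while queue:
                x, y = queue.popleft()
                area += 1
                min_x, max_x = min(min_x, x), max(max_x, x)
                min_y, max_y = min(min_y, y), max(max_y, y)
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    in_bounds = 0 <= nx < width and 0 <= ny < height
                    if in_bounds and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            # Discard specks and oversized regions, as described in the post.
            if min_area <= area <= max_area:
                boxes.append(
                    (min_x, min_y, max_x - min_x + 1, max_y - min_y + 1, area)
                )
    return boxes
```

Each returned tuple holds the bounding box and the pixel count of one blob, so the same pass yields both the box to draw and the area used later for classification.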

From there, I extract the shape into a 40x20 rectangle. I calculate the area of the shape and use that area to classify the image. For the training images I used, the area ranges of the three shapes do not overlap. Measured in pixels, these areas are:

| Shape | Min Area (px) | Max Area (px) |
| --- | --- | --- |
| Diamond | 364 | 417 |
| Squiggle | 534 | 559 |
| Oval | 623 | 669 |
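Because the measured ranges do not overlap, the classification step can be a simple range lookup. The sketch below is only an illustration under that assumption: the name `classify_by_area` and the optional `margin` parameter are hypothetical, and the ranges are copied from the measurements above.

```python
# Area ranges in pixels, copied from the measurements in the post.
AREA_RANGES = {
    "diamond": (364, 417),
    "squiggle": (534, 559),
    "oval": (623, 669),
}


def classify_by_area(area, margin=0):
    """Return the shape whose area range contains `area`, or None.

    `margin` (hypothetical) widens every range by that many pixels on both
    sides, to tolerate images slightly outside the measured ranges. Keep it
    below 32 so the widened squiggle and oval ranges still do not overlap.
    """
    for shape, (low, high) in AREA_RANGES.items():
        if low - margin <= area <= high + margin:
            return shape
    return None
```

Returning `None` for areas that fall in the gaps between ranges makes it easy to flag cards that need a closer look instead of forcing a guess.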

There are a few more strategies I want to play around with. These include:

- Averaging all the shapes on a card

- Using dilation and erosion to get cleaner lines

- Considering the perimeter of the shape as a redundant check

- Testing the similarity of the test shape against a reference model of each shape. This would be computationally intensive, since every shape would need to be compared against all 3 possible shapes
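To make the dilation and erosion idea concrete, here is a minimal sketch of a morphological opening (erosion followed by dilation) on a binary mask, written in plain Python. The 3x3 square neighbourhood, the 0/1 list-of-rows mask format, and the function names are assumptions made for this example, not something I have settled on.

```python
_OFFSETS = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def _neighbourhood(mask, y, x):
    """Yield the 3x3 neighbourhood of (y, x); out-of-bounds pixels count as 0."""
    height, width = len(mask), len(mask[0])
    for dy, dx in _OFFSETS:
        ny, nx = y + dy, x + dx
        yield mask[ny][nx] if 0 <= ny < height and 0 <= nx < width else 0


def erode(mask):
    """Keep a pixel only if it and all 8 of its neighbours are set."""
    return [
        [int(all(_neighbourhood(mask, y, x))) for x in range(len(mask[0]))]
        for y in range(len(mask))
    ]


def dilate(mask):
    """Set a pixel if it or any of its 8 neighbours is set."""
    return [
        [int(any(_neighbourhood(mask, y, x))) for x in range(len(mask[0]))]
        for y in range(len(mask))
    ]


def open_mask(mask):
    """Erode, then dilate: removes small specks and keeps larger blobs."""
    return dilate(erode(mask))
```

Every output pixel inspects nine input pixels, so one opening pass over a 60x90 card costs roughly 2 x 9 x 5400 pixel reads. That is small, but it is extra work on every card.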

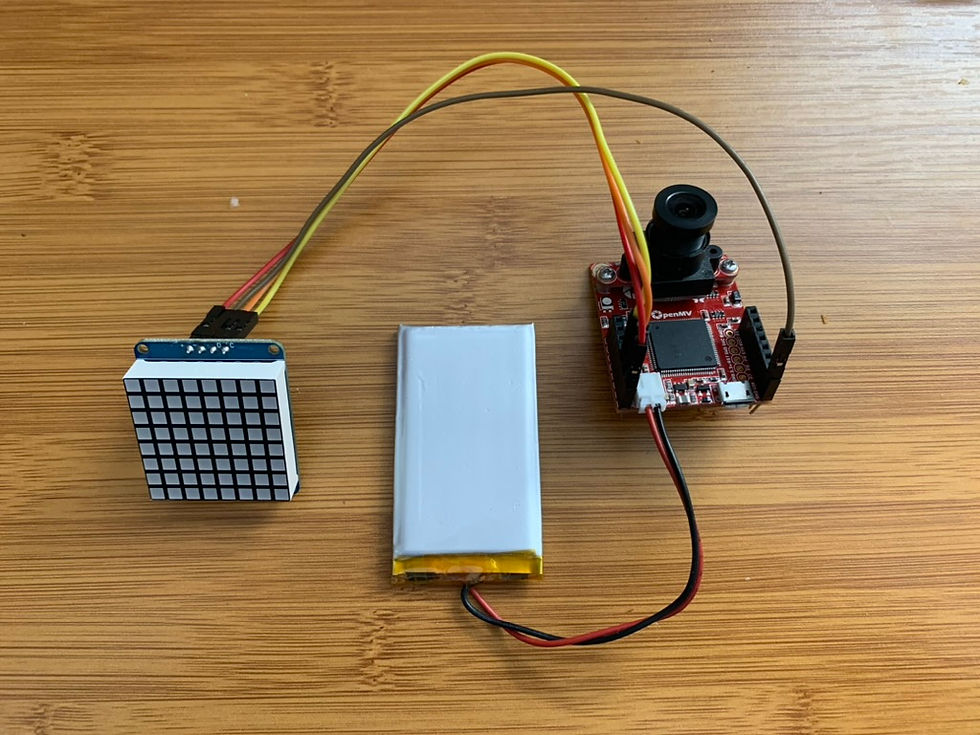

However, I want to keep in mind that this will run on an embedded system, so adding more processing steps may slow it down. In the end, I expect to go with the simplest system, which is the one I am currently using.

Goals for Next Week

Team goals: Work on pre-processing so we have a standardized way of reducing noise in the cards.

Personal goals: Be able to classify the shape, number of shapes, and fill of a card.

Awesome work, Bradley! I wasn't expecting the shape areas to be so cleanly mutually exclusive; that makes shape classification much easier. Quick question about the max and min areas you measured: were those numbers obtained from checking the areas of all the cards in the training set, or only a handful? I'm also curious whether the numbers will change at all, since our 60x90 size will be a bit different from the training data.